Content from Lesson 1: Code Development & Debugging with IDEs

Last updated on 2025-05-01

Overview

Questions

- What is an Integrated Development Environment (IDE) and what role does it play in software development?

- What are the common features of IDEs?

- Why is debugging important, and what are the main techniques developers use to debug their code?

- How can you use a debugger in an IDE like Visual Studio Code to find and fix errors in your code?

Objectives

- Define what an Integrated Development Environment (IDE) is and describe its role in the software development process

- Identify common features of IDEs and explain how they support efficient code writing, debugging, and software project management

- Explain the importance of debugging and list common debugging techniques

- Demonstrate how to use a debugger within an IDE like Visual Studio Code

- Recognise the benefits of using an IDE for improving code quality, reducing errors, and accelerating software development

This session provides an introduction to Integrated Development Environments (IDEs), powerful tools for software development. We will explore how various features built into IDEs can streamline your software development workflow, especially through their built-in debugging tools — which allow you to identify and fix issues with your code efficiently.

What is an Integrated Development Environment (IDE)?

An Integrated Development Environment (IDE) is a graphical application that provides a comprehensive workspace for writing, editing, testing, and debugging code—all in one place. At the core of an IDE is a code editor, and it combines several tools that developers need into a single interface to streamline the code development process. IDEs are extremely useful and modern software development would be very hard without them.

Historically, developers typically wrote code using simple text editors, often terminal-based with no graphical interface or syntax checking support. They had to rely on separate tools outside the editor to compile, debug, and manage their code, making development a much more fragmented experience. It is worth noting that popular terminal-based editors such as Emacs and Vim may appear deceptively simple at first glance - but they are, in fact, highly powerful and customisable frameworks for coding and automating development workflows.

Today, although some IDEs are designed for specific programming languages, many modern IDEs can support multiple languages through a wide variety of plugins — often created and maintained by the developer community.

Why use an IDE?

An IDE brings everything you need to write, test, and debug code into one place — saving time by helping you write better code faster. IDEs help by:

- reducing setup and development time - everything you need for editing, running, and debugging code is in one place and the need to switch between different tools/applications/windows is significantly reduced

- offering helpful tools like syntax checking, code suggestions, and autocomplete, whose real-time feedback leads to fewer errors

- making it easier to debug and test code, so issues are detected and fixed sooner

- providing a consistent environment across projects

For beginners, IDEs lower the barrier to entry by making it easier to spot mistakes and understand code structure. For experienced developers, IDEs boost productivity and streamline complex workflows.

Common IDE Features

Let’s look at what most IDEs offer - these features all work together to make your life easier when coding:

- Code editor with syntax highlighting and automatic code formatting for better readability and consistency

- Intelligent code completion that suggests syntactically correct options as you type to speed up development

- Powerful search tools to quickly locate functions, classes, or variables

- Inline documentation and reference lookup to understand the definitions and usage of symbols (functions, parameters, classes, fields, and methods) and variables without leaving your code

- Built-in support for running and managing tests through integrated testing frameworks

- Seamless integration with version control systems (like Git) for tracking changes and collaboration

- Debugging tools for setting breakpoints, stepping through code, and inspecting variables during runtime

- An integrated terminal for executing commands directly within the IDE

- A project/file explorer for easy navigation and management of your software project

Some IDEs also offer:

- Deployment tools to package and release your code efficiently

- Basic project and issue tracking features to support task management

Popular IDEs

Here are a few widely used IDEs across different languages and fields:

- Visual Studio Code (VS Code) – lightweight and highly customisable; supports many languages

- PyCharm – great for Python development

- RStudio – tailored for R programming but allows users to mix (R Markdown) text with code in R, Python, Julia, shell scripts, SQL, Stan, JavaScript, C, C++, Fortran, and others, similar to Jupyter Notebooks.

- Eclipse – often used for Java and other compiled languages

- JupyterLab – interactive environment for Python and data science

- Spyder – popular among scientists using Python

What is Code Debugging?

Now, what happens when your code does not work as expected? That is where code debugging comes in. Debugging means finding, understanding, and fixing errors in your code — which can manifest as unexpected behavior, crashes, or incorrect outputs. Debugging is an essential step in software development, ensuring that your code runs as intended and meets its requirements (and quality standards).

Why Debugging Matters?

Debugging ensures your code behaves as it should and, when it does not, helps you find the root cause of problems rather than just guessing. Even small mistakes in code can cause unexpected behavior or crashes. Debugging helps with:

- code correctness - to ensure your program works as expected and meets requirements

- error resolution - to help you understand why your code is not performing correctly, allowing you to find and fix issues that make your program behave incorrectly

- improving code quality - regular debugging leads to cleaner, more reliable and performant code and reduces the risk of problems in production

- efficient code development - familiarity with debugging tools and techniques can significantly reduce the time spent on troubleshooting and enhance overall productivity.

Debugging is a normal part of the code development process. It is not just about fixing mistakes; it is also about understanding your code better.

Common Debugging Techniques

Let’s be real — everyone’s code breaks sometimes. Debugging is just part of the game. For starters, you can try rubber duck debugging - a technique where you explain your code, line by line, out loud — to a colleague or to an inanimate object like a rubber duck. The idea is that by forcing yourself to verbalise what your code is supposed to do, you slow down and think more clearly about each part, which often helps you spot mistakes or logical errors you might have missed when just reading the code silently.

In addition to talking to a rubber duck (which is surprisingly effective), one of the simplest tricks is adding print statements to your code: just printing out variable values or messages at key points can quickly show you where things start to go wrong.

Logging is another smart move, especially for bigger projects, because it helps you track what your program is doing over time and helps diagnose issues that occur in specific runtime conditions. A related approach is to use assert statements in your code, which check that a condition you expect to be true actually holds and stop the program with an error if it does not.
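To make the difference between these techniques concrete, here is a minimal sketch that uses a print statement, a log message, and an assert statement side by side. The mean function and its data are invented for illustration and are not part of the lesson's example code.

```python
import logging

# Show DEBUG messages and above; raising the level later silences them
# without having to delete any code.
logging.basicConfig(level=logging.DEBUG, format="%(levelname)s: %(message)s")
logger = logging.getLogger(__name__)


def mean(values):
    """Return the arithmetic mean of a non-empty list of numbers."""
    # Assert: fail fast if an assumption we rely on does not hold.
    assert len(values) > 0, "values must not be empty"

    total = sum(values)
    # Print debugging: quick, but has to be removed again afterwards.
    print("total =", total, "count =", len(values))
    # Logging: can stay in the code and be switched on or off by level.
    logger.debug("computing mean of %d values", len(values))

    return total / len(values)


result = mean([2.0, 4.0, 6.0])
logger.info("mean is %s", result)
```

Calling mean([]) would stop with an AssertionError that names the broken assumption, which is usually easier to diagnose than the ZeroDivisionError that would otherwise appear further down.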

But if you want to level up, using a built-in debugger (like the one in VS Code) is a game-changer — you can set breakpoints, step through your code line by line, and actually see what is happening in real time.

And if you really want to catch problems early, writing tests to check that your code behaves properly can save you from bigger headaches later.
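As a rough sketch of what such a test can look like, the example below checks a temperature conversion against two well-known reference points. The fahr_to_celsius function is a hypothetical stand-in written for this illustration.

```python
def fahr_to_celsius(fahr):
    """Convert a temperature from Fahrenheit to Celsius."""
    return (fahr - 32) * 5 / 9


def test_fahr_to_celsius():
    # Known reference points: freezing and boiling points of water.
    assert fahr_to_celsius(32) == 0
    assert fahr_to_celsius(212) == 100


# A test runner such as pytest would normally find and call the test
# function for us; calling it directly also works.
test_fahr_to_celsius()
```

If a later change breaks the conversion, the test fails immediately, pointing at the function long before the wrong values show up in any results.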

Practical Work

In the rest of this session, we will walk you through how to use a debugger in VS Code, focusing on practical steps and tips to help you find and fix errors more efficiently in your code. It is easier than you think and can really save you time.

- Integrated Development Environments (IDEs) are all-in-one tools for writing, editing, testing, and debugging code, improving developer efficiency by reducing the need to switch between different applications.

- Common IDE features include code editing, syntax highlighting, code completion, version control integration, debugging tools, project navigation, and built-in terminals.

- Debugging is the process of finding and fixing bugs in code to ensure it behaves as intended, improving code quality and reliability.

- Common debugging techniques include adding print statements, using built-in debuggers to set breakpoints and inspect variables, writing tests, and using logging.

- Using an IDE for debugging allows developers to step through their code interactively, making error detection and resolution much faster and more effective.

Content from 1.1 Setup & Prerequisites

Last updated on 2025-04-30

Overview

Questions

- What prerequisite knowledge is required to follow this topic?

- How do I set up my machine to follow this topic?

Objectives

- Understand what prerequisite knowledge is needed before following this topic

- Set up your machine to follow this topic

- Shell with Git version control tool installed and the ability to navigate filesystem and run commands from within a shell

- Python version 3.8 or above installed

- Understanding of Python syntax to be able to read code examples

- Visual Studio Code installed (ideally the latest version)

Setup

Shell with Git

On macOS and Linux, a bash shell will be available by default.

If you do not have a bash shell installed on your system and require assistance with the installation, you can take a look at the instructions provided by Software Carpentry for installing shell and Git.

Python

Python version 3.8 or above is required. Type python --version at your shell prompt and press enter to see what version of Python is installed on your system (on some systems the command is python3 --version). If you do not have Python installed on your system and require assistance with the installation, you can take a look at the instructions provided by Software Carpentry for installing Python in preparation for undertaking their Python lesson.
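If you would rather check from within Python itself, the following is a minimal sketch using the standard sys module. The meets_minimum helper and the MINIMUM_VERSION constant are names made up for this example.

```python
import sys

MINIMUM_VERSION = (3, 8)  # minimum stated in this lesson's prerequisites


def meets_minimum(version_info=sys.version_info, minimum=MINIMUM_VERSION):
    """Return True if the given version is at least the minimum."""
    # Compare only the (major, minor) part, e.g. (3, 11) >= (3, 8).
    return tuple(version_info[:2]) >= minimum


print("Running Python", sys.version.split()[0])
print("Meets the 3.8 requirement:", meets_minimum())
```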

VS Code

The hands-on part of this topic will be conducted using Visual Studio Code (VS Code), a widely used IDE. Please download the appropriate version of Visual Studio Code for your operating system (Windows, macOS, or Linux) and system architecture (e.g., 64-bit, ARM).

Content from 1.2 Getting Started with VSCode

Last updated on 2025-10-24

Overview

Questions

- How do I access the key features of Microsoft Visual Studio (VS) Code?

- How do I open a software project in VSCode?

- What are VSCode extensions, and how do I use them?

Objectives

- Describe the general layout of the VSCode interface

- Download or clone an existing remote GitHub repository

- Open a code folder in VSCode using the explorer feature

- Install and configure an extension to VSCode that helps with Python code development

Running VSCode

Let’s start by running VSCode on our machines. How you run VSCode will differ depending on which operating system you have installed.

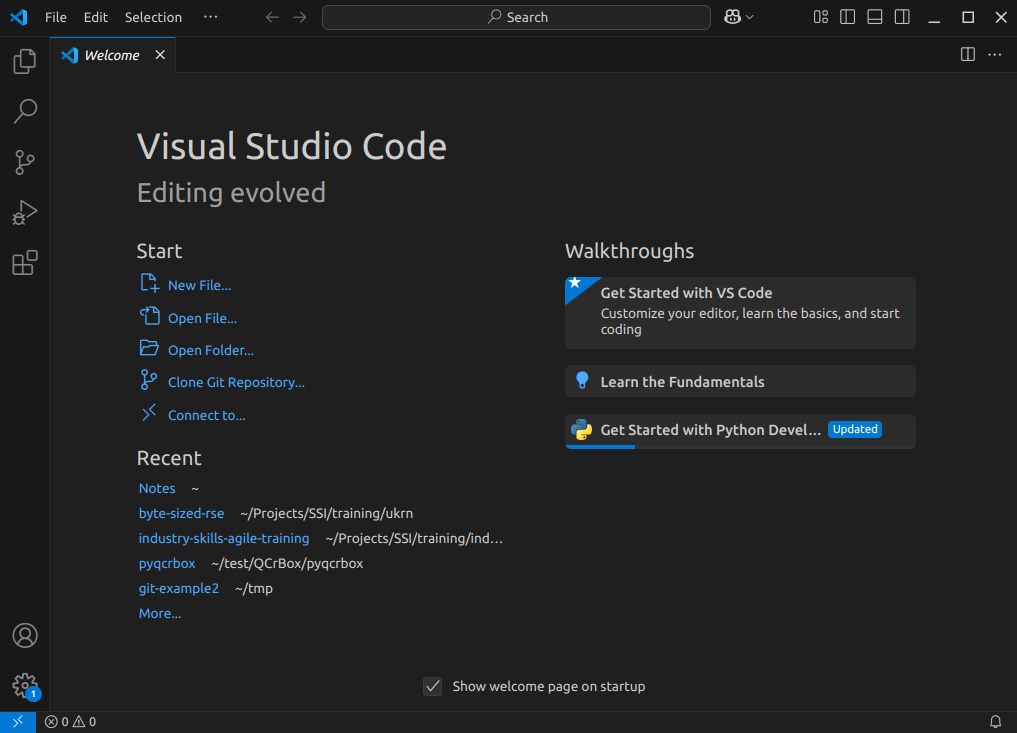

The first thing you’ll likely see is a welcome-style page with links to features for opening files, and creating or opening a project. You may find it asks you which kind of theme you’d like - you can select from either a dark or light theme.

Navigating Around VSCode

So let’s take a look at the application. You’ll see some icons on the left side, which give you access to its key features. Hovering your mouse over each one will show a tooltip that names that feature:

- Explorer - the top one is a file navigator, or explorer - we can use this to open existing folders containing program files.

- Search - the next one down is a search capability, so you can search for things (and replace them with other text) over your code files.

- Source control - this gives you access to source code control, which includes Git version control functionality. This feature means you can do things like clone Git repositories (for example, from GitHub), add and commit files to a repository, things like that.

  If you’re not familiar with Git, that’s totally fine - you don’t have to use this feature, although it’s worth looking into using version control for writing your code. Version control systems like Git allow you to manage your code by storing it - and all the changes you make to it - within a repository hosted elsewhere, for example, on GitHub.

- Run and Debug - this allows you to run programs you write in a special way with a debugger, which allows you to check the state of your program as it is running, which is very useful and we’ll look into later.

- Extensions - which we’ll look into right now, allows you to install extensions to VSCode to extend its functionality in some way.

There are many other features and ways to access them, and we’ll cover key ones throughout this lesson.

Installing Extensions

Extensions are a major strength of VSCode. Whilst VSCode appears quite lightweight, and presents a simple interface (particularly compared to many other IDEs!), this is quite deceptive. You can extend its functionality in many different ways. For example, you can install support for other languages or greater support for version control, and there is even support for working with databases. There are literally tens of thousands of possible extensions now.

VSCode already comes with built-in support for JavaScript, including TypeScript and Node.js, and has extensions for other languages too (C++, C#, Java, PHP, Go, and many others). Installing a language extension will allow you to do more things with that particular language in VSCode, as we’ll see now.

Let’s install an extension now:

- Firstly, select the extensions icon, then type “Python” into the search box at the top, and it’ll give you a list of all Python-related extensions.

- Select the one which says Python from Microsoft. This is the official Microsoft Python extension.

- Then select Install.

It might take a minute - you can see a sliding blue line in the top left to indicate it’s working. Once complete, you should see a couple of “Welcome” windows introducing you to two of its key features - support for Python and Jupyter notebooks. If you use Jupyter notebooks, which is a way of writing Python programs that you can run line by line from within an editor as you write the program, you may find this useful.

For now, let’s configure this extension for our Python development, and to do that, we need to tell VSCode which Python installation on our machine we’d like it to use. In the Python Welcome window, select Select a Python interpreter, and then Select Python interpreter. You may find you have many installations of Python, or only have one. Try to select a version that is 3.8 or later if you can. Then select Mark done, and close the Welcome window.

A Sample Project

Next, let’s obtain some example Python code and edit it from within VSCode. First, download the example code we’ll use from https://github.com/UNIVERSE-HPC/code-style-example/releases/tag/v1.0.0, either as a .zip or .tar.gz compressed archive file. If you’re unsure, download the .zip file. Then, extract all the files from the archive into a convenient location. You should see files contained within a new directory named code-style-example-1.0.0.

Now we need to load the code into VSCode to see it. You can do this in a couple of ways, either:

- Select the Source control icon from the middle of the icons on the left navigation bar. You should see an Open Folder option, so select that.

- Select the File option from the top menu bar, and select Open Folder....

In either case, you should then be able to use the file browser to locate the directory with the files you just extracted, and then select Open. Note that we’re looking for the folder that contains the files, not a specific file.

What about using Git Version Control?

If your system has the Git version control system installed, you may see a Clone Repository option here too. If you are familiar with Git and wish to use this option instead, select it and enter the repository’s location as https://github.com/UNIVERSE-HPC/code-style-example. Then use the file browser that is presented to find a convenient location to store the cloned code and click on Select as Repository Destination, then select Open when the ‘Would you like to open the cloned repository?’ popup appears.

You’ll then likely be presented with a window asking whether you trust the authors of this code. In general, it’s a good idea to be at least a little wary, since you’re obtaining code from the internet, so be sure to check your sources! Be careful here - I found on Windows the “Trust” option appears on the left, whilst on Mac, it appears on the right! In this case, feel free to trust the repository! You’ll then see the explorer present you with some files in a small window (or pane) on the left you can use to navigate and find files.

So far within VSCode we have downloaded some code from a repository and opened a folder. Whenever we open a folder in VSCode, this is referred to as a “Workspace” - essentially, a collection of a project’s files and directories. So within this workspace, you’ll see the following:

- A data folder, containing a single data file (click on it to see the data file within it).

- Two files, a climate_analysis.py Python file, and a LICENSE.md file

So next, let’s look at editing code.

- Key VSCode features are accessible via the left navigation bar and the menu

- VSCode’s capabilities can be increased by installing extensions

- Language-specific support is available via extensions

- A VSCode “workspace” is a project that consists of a collection of folders and files

- Git source code repositories on GitHub can be cloned locally and opened from within VSCode

Content from 1.3 Using the Code Editor

Last updated on 2025-10-24

Overview

Questions

- How do I open a source code file in VSCode?

- What editing features will help me when writing code?

Objectives

- Use syntax highlighting to identify code styling issues and common coding mistakes

- Use code completion to automate finishing an incomplete code statement

- Use an extension to help with writing Python docstrings

- Describe how VSCode highlights the status of files managed under version control

Now that we’ve acquainted ourselves with running VSCode, let’s take a look at our example code. Select the climate_analysis.py file in the explorer window, which will bring up the contents of the file in the code editor.

The File Explorer has Disappeared!

You may find, perhaps on reopening VSCode, that the explorer is no longer visible. In this case, you can select Explorer from the sidebar to bring it back up again, and if you don’t currently have a workspace loaded, you can select Open Folder to select the code folder.

Note that, as an example, the code is deliberately written to have flaws. For instance, the line spacing is inconsistent, there are no code comments, there’s a variable that’s not used, and you may spot other issues too. But in essence, the code is designed to do the following:

- Open a file in the CSV (comma separated value) format

- Go through the file line by line, and:

  - If the line begins with a # symbol, ignore it.

  - Otherwise, extract the fourth column (which contains temperature in Fahrenheit), convert it to Celsius and Kelvin, and output those readings.
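The steps listed above can be sketched roughly as follows. This is a tidy reconstruction for illustration only, not the contents of climate_analysis.py: the function names, the use of the csv module, and the one-line sample data are all assumptions.

```python
import csv
import io

COMMENT = "#"   # marker at the start of comment lines
SHIFT = 273.15  # offset between Celsius and Kelvin


def fahr_to_celsius(fahr):
    """Convert a temperature from Fahrenheit to Celsius."""
    return (fahr - 32) * 5 / 9


def fahr_to_kelvin(fahr):
    """Convert a temperature from Fahrenheit to Kelvin."""
    return fahr_to_celsius(fahr) + SHIFT


def convert_readings(lines):
    """Return (celsius, kelvin) pairs for every data row in CSV lines."""
    results = []
    for row in csv.reader(lines):
        # Skip blank lines and lines that begin with the comment marker.
        if not row or row[0].startswith(COMMENT):
            continue
        fahr = float(row[3])  # fourth column: temperature in Fahrenheit
        results.append((fahr_to_celsius(fahr), fahr_to_kelvin(fahr)))
    return results


# Invented sample standing in for the real data file.
sample = io.StringIO("# station,date,min,max\nA,2020-01-01,40.0,59.0\n")
for celsius, kelvin in convert_readings(sample):
    print("Max temperature in Celsius", celsius, "Kelvin", kelvin)
```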

Let’s take a look at some of what the code editor gives us.

Syntax Highlighting

You’ll notice that the Python syntax is being highlighted for us, which helps readability.

Here, it uses colour to distinguish the various parts of our program. Functions are yellow, Python statements are purple, variables are light blue, strings are a reddish orange, and so on. Perhaps unsurprisingly, this feature is known as syntax highlighting. It’s possible to edit the colour scheme to your liking for a particular language, although we won’t go into that now.

This is really handy for giving you immediate feedback on what you are typing, and can help you to identify common syntax mistakes. For example, if you delete the closing parenthesis on the open call, the opening one goes red, with a squiggly line underneath, indicating an issue.

So this is great, and helps us understand what we are writing, and highlights some mistakes.

Code Completion

Something that’s also useful is VSCode’s ability (via the Python and Pylance extensions) to help you write and format your code whilst you’re typing.

For example, on a blank line somewhere, enter for x in something:.

On the next line, we can see that it has automatically indented the cursor for us, knowing that we’re inside a loop.

Another really helpful feature is something known as code completion (in VSCode, this is referred to as IntelliSense). This is a great time saver, and a really useful feature of IDEs. Essentially, as you type, it works out the context of what you are doing, and gives you hints. For example, if we start typing the name of a variable we’ve already defined, such as climate_data, we can see that it’s zeroing in as we type on the options for what we might be trying to type. When we see climate_data, we can press Tab to complete it for us. As another example, if we wanted to open another file, we might type new_file = open(. In this case, it provides information on the file open function and its arguments, along with a description of what it does. This is really handy, as we don’t have to take the time to look all this information up on the web, for example.

Need a Thing? Install an Extension!

As we just saw, included in the list of issues with our code was the lack of docstrings. If we want to write good code, we should be adding code comments, including docstrings for our functions, methods, and modules.

Let’s try and find an extension that might help us with writing docstrings. Select the Extensions icon, and type docstring - you should see an autoDocstring extension by Nils Werner at the top. Select that, and you’ll see a page outlining what it is. Also note via the number of downloads that it’s very widely used.

What’s really handy is the little video that shows us what it does. This looks exactly like what we’re after! Select Install.

Now, when we go to a function, for example FahrToCelsius, go to the next line, and add """, we’ll see a small pop-up to add a docstring. Press Tab to do so.

It does all the hard work of adding in the structure of a docstring for us, so we just need to fill in the blanks. This is another good example of us realising it would be nice to have something to help us, searching for an extension, and trying it out.
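For illustration, a docstring with the blanks filled in might end up looking something like the sketch below. The exact layout of the generated template depends on how the extension is configured; this example assumes Google-style Args and Returns sections, and the function shown is a hypothetical stand-in rather than the code in the example file.

```python
def fahr_to_celsius(fahr):
    """Convert a temperature from Fahrenheit to Celsius.

    Args:
        fahr (float): Temperature in degrees Fahrenheit.

    Returns:
        float: Temperature in degrees Celsius.
    """
    return (fahr - 32) * 5 / 9


# The docstring is stored on the function and is what help() displays.
print(fahr_to_celsius.__doc__)
```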

Using a Git Code Repository?

For those of you familiar with version control and who retrieved the example code via cloning its repository instead of downloading it, there are some other editor features that help with using version control. One of these is that the filename changes colours in the file explorer depending on its status within version control:

- White - an existing file is unchanged from the copy in the local repository.

- Orange - the content of an existing file has changed, and the change(s) have not been tracked by version control yet.

- Green - a new file has been added and is unknown to version control.

So at a glance, you can get an idea of what’s changed since your last commit.

Summary

So in summary, many of these editing features are typical of IDEs in general, and the great thing is that they are really helpful at saving us time. Things like syntax highlighting, code completion, automatic code formatting and inserting docstrings, may not seem like much, but it all adds up!

- IDEs typically have a host of features that help save time when writing code

- Syntax highlighting gives you immediate feedback of potential issues as you write code

- Code completion helps to automatically finish incomplete code statements and names

Content from 1.4 Running and Debugging Code

Last updated on 2025-10-24

Overview

Questions

- How do I run code in VSCode?

- How do I use a debugger to locate the source of a problem in my code?

- How does debugging fit within the broader process of development?

Objectives

- Use VSCode to run a Python script and have any text output displayed within a terminal

- Add a debugging breakpoint to a Python script

- Run a debugger so it pauses program execution at a breakpoint

- Use the debugger to step through our code statement by statement

- Use debugging information to identify the cause of a problem in our code

Running Python in VSCode

Now let’s try running a Python script. First, make sure your Python code doesn’t have any errors! Then, select the “Play”-looking icon at the top right of the code editor.

You should see the program run, and output displayed in a pop-up terminal window at the bottom:

OUTPUT

steve@laptop:~/code-style-example$ /bin/python3 /home/steve/code-style-example/climate_analysis.py

Max temperature in Celsius 14.73888888888889 Kelvin 287.88888888888886

Max temperature in Celsius 14.777777777777779 Kelvin 287.92777777777775

Max temperature in Celsius 14.61111111111111 Kelvin 287.76111111111106

Max temperature in Celsius 13.838888888888887 Kelvin 286.9888888888889

Max temperature in Celsius 15.477777777777778 Kelvin 288.62777777777774

Max temperature in Celsius 14.972222222222225 Kelvin 288.1222222222222

Max temperature in Celsius 14.85 Kelvin 288.0

Max temperature in Celsius 16.33888888888889 Kelvin 289.4888888888889

Max temperature in Celsius 16.261111111111113 Kelvin 289.4111111111111

Max temperature in Celsius 16.33888888888889 Kelvin 289.4888888888889

steve@laptop:~/code-style-example$

Error: the term conda is not recognised

If you’re running an Anaconda distribution of Python on Windows and you see this error, it means that VSCode is not looking in the right place for Anaconda’s installation. In this case, you may need to configure VSCode accordingly.

VSCode has a sophisticated method to access its inner functionality known as the Command Palette, which we’ll use to address this. Activate the Command Palette by pressing Ctrl + Shift + P simultaneously, then type Terminal: Select Default Profile. From the options, select Command Prompt C:\WINDOWS\..., and hopefully that should resolve the issue.

The pop-up window is known as the “Console”, and essentially is a terminal, or command prompt, where the program is run. You’ll notice we can also type in commands here too. For example, to get a listing of files, you could type dir on Windows, or ls on Mac or Linux.

We can also close this terminal/console at any time, and start a new one by selecting Terminal from the menu and selecting New Terminal. So when we write and run our code, we have the option of never having to leave VSCode at all for most things.

Debugging Code

Now finally, let’s look at a feature with IDEs which is often overlooked, that of the debugger.

A debugger is a bit like performing exploratory surgery on a patient. You know there’s something wrong, but you don’t know exactly where the problem resides. What’s useful with debuggers is that you go looking within the codebase as it’s actually running to find the source of the problem.

In order to run a debugging session we first need to tell the IDE where we’d like to examine the code. Then you run the code in a special way, using a debugger, and it pauses the execution of the code at that point. You then have the freedom to take a look around and examine the state of variables, which functions have been called up until this point, and so on, and hopefully identify the cause of the issue.

Now, many people when starting out with coding disregard debuggers as complicated and tough to understand. And 30 or 40 years ago, debuggers were indeed quite complicated to set up and use. But these days, debuggers are perhaps a little more straightforward, with IDEs doing a lot of complex stuff for us.

Introducing a Problem

Let’s assume we have a problem with our code - by introducing one. In our climate_analysis.py code, where it says if data[0][0] != COMMENT, replace COMMENT with '!'. We perhaps might assume one of our colleagues erroneously made this change, but we haven’t spotted it yet. We try to run the code as before, and now it doesn’t work. We get a ValueError, which informs us it couldn’t perform a conversion of a value extracted from the data file to a float as part of its temperature conversion.
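To see why changing the comment marker leads to a ValueError, here is a small self-contained sketch of the same mechanism. The parse_max_temperature function and the header line are invented for this illustration; only the data[0][0] check mirrors the condition quoted above.

```python
COMMENT = "#"


def parse_max_temperature(line, comment_marker):
    """Return the fourth field as a float, or None for a comment line."""
    data = line.split(",")
    # data[0][0] is the first character of the first field.
    if data[0][0] != comment_marker:
        return float(data[3])
    return None


# Invented header line of the kind found at the top of a data file.
header = "# station,date,min_temp,max_temp"

# With the correct marker, the header is recognised and skipped.
print(parse_max_temperature(header, COMMENT))

# With the wrong marker, the header is treated as data, and float()
# cannot convert the text in its fourth field.
try:
    parse_max_temperature(header, "!")
except ValueError as error:
    print("ValueError:", error)
```

Note that the error is raised inside the conversion, while the actual mistake sits one line earlier in the comparison. That gap between where an error appears and where it originates is what the debugger helps to close.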

Adding a Debugging Breakpoint

Now we know where the error is occurring but we don’t know the source of the problem, which may not be in the same place. So let’s add in what is known as a breakpoint to our code. This is where the debugger will stop running the code and pause for us. Let’s add it at the start of the for line in climate_data: line. We do that by clicking in the left margin for that line. By hovering in the margin, you’ll see a faded red dot appear. Select it on that line and this sets the breakpoint.
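As an aside, Python also offers a built-in breakpoint() function (available since Python 3.7) that pauses execution from within the code itself, which can be handy when no IDE is to hand. The sketch below is an illustration under assumptions: the to_celsius_all function and its pause flag are made up, and the flag is left off so the example runs without stopping.

```python
def to_celsius_all(fahr_values, pause=False):
    """Convert Fahrenheit readings to Celsius, optionally pausing each time."""
    converted = []
    for fahr in fahr_values:
        if pause:
            # Hands control to the active debugger (pdb by default),
            # much like a breakpoint set in the editor margin.
            breakpoint()
        converted.append((fahr - 32) * 5 / 9)
    return converted


# pause is left off here so the example runs straight through.
print(to_celsius_all([32, 212]))
```

Unlike a breakpoint set in the margin, a breakpoint() call is part of the source file, so it needs to be removed again before the code is shared.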

Using the Debugger

Let’s run the code using the debugger. Select the Run and Debug icon on the left, and select Run and Debug. Then it will likely ask two questions in a pop-up pane near the top:

- It asks you to Select debugger, so select the suggested Python Debugger.

- Then it asks you to Select a debug configuration, so select Python File to debug the current file.

Now the Python script is running in debug mode. You’ll see the execution has paused on the line where we set the breakpoint, which is now highlighted. Some new information is now displayed in various panes on the left of the code editor. In particular:

- VARIABLES - on the left, we can see a list of variables, and their current state, at this point in the script’s execution, such as COMMENT and SHIFT, and climate_data (which is a reference to our open data file). We don’t have many at the moment. It also distinguishes between local variables and global variables - this is to do with the “scope” of the variables, as to how they are accessible from this point in the running of the code. Global variables can be seen from anywhere in the script. And local variables are those that are visible from this point of the program. If we were within a function here, we would see variables that are defined and only used within that function as local variables only. For example, if we set a breakpoint within the FahrToKelvin function, we would see kelvin as a local variable, but it wouldn’t be listed as a global variable.

- CALL STACK - this is a record of the journey the script has taken, in terms of functions called, to get to this position in its execution. It shows us that we are at the top level of our script, which makes sense, since our breakpoint is at the top level of the script, and not within any function. If it were within the FahrToKelvin function, for example, we’d see that added to the call stack. It also shows us the line number where execution has paused at this level of the call stack.
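The same information the debugger shows in these panes can also be inspected from Python code, which may help clarify what local variables, global variables, and the call stack actually are. The following sketch uses the built-in locals() and globals() functions and the standard inspect module; the fahr_to_kelvin function here is an assumed stand-in, not the lesson’s FahrToKelvin.

```python
import inspect

SHIFT = 273.15  # defined at module level, so it is a global variable


def fahr_to_kelvin(fahr):
    kelvin = (fahr - 32) * 5 / 9 + SHIFT
    # Names that exist only inside this call, as a debugger would list
    # under local variables when paused here.
    local_names = sorted(locals())
    # Function names from the innermost frame outwards, similar to what
    # the call stack pane displays.
    stack_names = [frame.function for frame in inspect.stack()]
    return kelvin, local_names, stack_names


kelvin, local_names, stack_names = fahr_to_kelvin(59)
print("locals inside the function:", local_names)
print("SHIFT is a global:", "SHIFT" in globals())
print("call stack:", stack_names)
```

Inside the function, fahr and kelvin are local, while SHIFT is only reachable because it is global. The first entry of the call stack is the function currently executing, followed by whatever called it.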

Now, we can also see some new icons at the top to do with debugging:

- The first one is continue, which allows the script to keep running until the next breakpoint.

- The next one allows us to step over - or through - the script one statement at a time.

- The next two allow us to choose to step into or out of a function call, which is interesting. If we want to examine the inner workings of a function during this debug session, we can do that.

- The green circular arrow restarts the debug process.

- The red square stops debugging completely.

So let’s step through our code by selecting the second icon and see what happens. As we do so, we can see the variable state changing. By looking in the variables section, we can see that the line variable contains the first line read from the data file. On the next step, we’ve reached the if statement. If we step again, and then again, our program halts because it’s run into the problem we saw before.

This tells us something useful - that the problem occurs in the first iteration of the loop. So, this implies, the problem might be with the first line of data being processed, since the Python code is going through the data file line by line. If we re-run the debugger, we can go through this process again. And we can see something interesting when we get to the if statement. From the code, we know that the if statement is looking for an exclamation mark at the beginning of the line to indicate a comment. However, the data variable contains a ‘#’ as the first character instead. Therefore, in this case, the code will assume it’s a data line and attempt to process it as such. And then it will fail with the exception we saw before.
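The reasoning above can be reproduced outside the debugger with a small sketch. The helper names below (is_treated_as_data, try_convert) are hypothetical and exist only for illustration. The sketch also assumes that the exception seen earlier is the ValueError that Python raises when float() is given non-numeric text:

```python
# The first line of the data file, as shown in the lesson's example data.
HEADER_LINE = "# POINT_X,POINT_Y,Min_temp_Jul_F,Max_temp_jul_F,Rainfall_jul_inch\n"


def is_treated_as_data(line, comment_marker):
    """Mirror the script's check: a line is data unless it starts with the marker."""
    data = line.split(',')
    return data[0][0] != comment_marker


def try_convert(line):
    """Attempt the same float conversion the script performs on the fourth column."""
    data = line.split(',')
    try:
        return float(data[3])
    except ValueError as error:
        return f"ValueError: {error}"


# With the wrong marker ('!') the header is mistaken for a data line.
print(is_treated_as_data(HEADER_LINE, '!'))
# With the correct marker ('#') the header is recognised as a comment.
print(is_treated_as_data(HEADER_LINE, '#'))
# Converting the header's fourth field to a number cannot succeed.
print(try_convert(HEADER_LINE))
```

Stepping through the real script in the debugger shows the same thing interactively: the check lets the header line through, and the conversion on the next statement is where execution stops.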

Fixing the Issue

So now we’ve identified the problem, we can fix it.

Firstly, stop the debug process by selecting the red square. Then edit the if line to search for COMMENT instead, reverting the code to what it was before. We can then rerun the debugger if we wish, to check our understanding. As we step through the code, we can see it correctly identifies the first line as a comment and ignores it, continuing to the next iteration of the for loop and the next line of data. Now that we have our fix in place, we can stop the debugger again.

We’ve now solved our problem, so we should remove the breakpoint. Running our code again as normal, we can see it now works as expected.

Debugging in Context

Typically, we’d use debugging when we’ve discovered a problem. Other techniques, such as testing, are great at identifying that there are problems, but not always at pinpointing the root cause and location of the actual problem. Debugging is the next step of that process. Sometimes, we discover a problem - perhaps our code testing shows us there’s an issue, or maybe we find out some other way. If we’re lucky, we can identify and fix the problem quickly. Where we can’t, debugging is there to help us. With particularly complex programs, it can be very difficult to reason about how they work and where the problems are, and debugging allows us to pick apart that process and, step by step, find the source of those issues.

- Run a script by selecting the “Play” icon in VSCode

- Debugging allows us to pause and inspect the internal state of a program while it is running

- Specify the points a debugger should pause by adding breakpoints to specific lines of code

- When a breakpoint is reached, a debugger typically shows you the current variables and their values and the stack of functions called to reach the current state

- Debuggers typically allow us to: step through the code a statement at a time, step into or out of a function call if we need further debugging information regarding that function, and continue execution until another breakpoint is reached or the end of the program

- Testing is used to identify the existence of a problem, whilst we use debugging to locate the source of a problem

Content from Lesson 2: Code Style, Quality & Linting

Last updated on 2025-05-01

Overview

Questions

- Why does consistent code style matter in software development?

- What are some common code styling practices and conventions?

- How can poor coding style lead to bugs and maintenance issues?

- What is a linter, and how does it help improve code quality?

Objectives

- Understand why consistent code style is important for collaboration and long-term maintainability.

- Identify key code style practices and how they vary between programming languages.

- Recognise how maintaining good code style can reduce bugs and improve code quality.

- Explain what a linter is and describe its role in detecting errors and enforcing style.

This session introduces the importance of code style and linting in writing clean, consistent, and maintainable code. You will learn how following a defined style guide improves code readability and collaboration, and how automated tools, known as linters, can help identify and fix style issues early in the development process. We will explore common linting tools and how to integrate them into your software development workflow.

Introduction to Code Style

Why Does Code Style Matter?

Software development is inherently a collaborative activity. Even if you do not currently intend for anyone else to read your code, chances are someone will need to in the future — and that person might even be you, months or years later. By following and consistently applying code styling guidelines, you can significantly improve the readability and maintainability of your code. Consistency plays a vital role in this process. Adopting a clear set of style guidelines not only helps you write uniform code but also makes it easier to switch between projects. This is especially important when working as part of a team, where shared understanding and clarity are essential.

Key Code Style Practices & Conventions

Styling practices and conventions play a key role in writing readable and maintainable code, but they can vary significantly between programming languages. These conventions generally cover aspects such as line length, line splitting, the use of white space, naming conventions for variables, functions, and classes, as well as indentation and commenting styles (where not enforced by the language itself).

It is important to note that programmers often have strong and differing opinions about what constitutes good style. For example, many style guides recommend a maximum line length of 80 characters, a convention that dates back to older hardware and terminal limitations. While some developers continue to find this helpful for readability and side-by-side editing, others argue that it feels unnecessarily restrictive on modern screens. Despite these differences, adopting and adhering to a consistent style within a project helps ensure clarity and makes collaboration smoother.

There are many established code style guides tailored to specific programming languages, such as:

- PEP 8 and Google Style Guide for Python

- Google C++ Style Guide and C++ Core Guidelines for C++

- Airbnb JavaScript Style Guide, Google JavaScript Style Guide and JavaScript Standard Style for JavaScript

- Go Style Guide and Go Styleguide for Go.

Maintaining Code Quality to Reduce Bugs

Poor coding style can lead to bugs and maintenance issues because it makes code harder to read, understand, and debug. Inconsistent naming, unclear structure, and sloppy formatting can cause confusion about what the code is doing, making it easier to introduce mistakes. Many things that seem harmless, and that do not cause immediate syntax errors, can still produce logic errors and wrong results later on, which makes them especially tricky to detect and fix. Small issues like unused variables, accidental redefinitions, or incorrect scoping can go unnoticed and later cause unexpected behaviour. Over time, this makes the codebase more fragile, harder to maintain, and much more difficult for others, or even your future self, to fix or extend.

Some examples of small oversights that stack up over time include:

- defining variables, or importing modules or headers, that are never used can clutter the code

- using vague variable names like data everywhere can make it unclear which data you are actually handling, causing mistakes

- bad indentation can cause logic errors, like running a block of code when you did not intend to

- variable scoping problems (e.g. reusing the same variable name in different scopes) can lead to shadowing, where a local variable hides a global or outer-scope variable, resulting in unexpected values being used.
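The shadowing problem in the last item can be seen in a small, self-contained sketch. The names used here (total, add_to_total_shadowed, add_to_total) are hypothetical and chosen purely for illustration:

```python
total = 100  # module-level (global) variable


def add_to_total_shadowed(amount):
    # Assigning to `total` here creates a new local variable that
    # shadows the global one; the global is left untouched.
    total = amount
    return total


def add_to_total(amount):
    # Reading the global and returning a new value avoids the shadowing.
    return total + amount


shadowed_result = add_to_total_shadowed(5)
combined_result = add_to_total(5)
print(shadowed_result, combined_result, total)  # prints: 5 105 100
```

Nothing here is a syntax error, which is exactly why this kind of slip is easy to miss when reading code quickly.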

Introduction to Linters

What is a Linter and Why Use One?

A linter is a tool that performs static analysis on your code — meaning it examines the source code without running it — to detect potential errors, stylistic issues, and code patterns that might cause bugs in the future. The term originates from a 1970s tool for the C programming language called “lint”.

Linters help catch errors early and enforce consistent code style, making your code more reliable, readable, and easier to maintain. They are especially useful for improving code quality and streamlining collaboration in teams.
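To make “static analysis” a little more concrete, here is a minimal sketch of a single lint-style check written with Python’s standard ast module. It is far simpler than a real linter and is not how any particular tool is implemented. The function name find_unused_imports and the sample source are assumptions made for illustration:

```python
import ast

# A small piece of source code to inspect; it is parsed, never executed.
SAMPLE_SOURCE = """
import string
import math

print(math.sqrt(16))
"""


def find_unused_imports(source):
    """Return the sorted names of imports that are never referenced."""
    tree = ast.parse(source)
    imported = set()
    used = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                # `import a.b` binds the name `a`; `import a as x` binds `x`
                imported.add(alias.asname or alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            for alias in node.names:
                imported.add(alias.asname or alias.name)
        elif isinstance(node, ast.Name):
            used.add(node.id)
    return sorted(imported - used)


unused = find_unused_imports(SAMPLE_SOURCE)
print(unused)  # prints: ['string']
```

The sample code is only ever read as text and turned into a syntax tree, so the check works even on code that would crash when run.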

Practical Work

In the rest of this session, we will walk you through how to use a linting tool.

The use of linting tools is often automated through integration with Continuous Integration (CI) pipelines or pre-commit hooks available in version controlled code repositories, helping to streamline the development process and ensure code quality consistently on each commit. This is covered in a separate session.

Content from 2.1 Setup & Prerequisites

Last updated on 2025-04-30

Overview

Questions

- What prerequisite knowledge is required to follow this topic?

- How do I set up my machine to follow this topic?

Objectives

- Understand what prerequisite knowledge is needed before following this topic

- Set up your machine to follow this topic

- Shell with Git version control tool installed and the ability to navigate filesystem and run commands from within a shell

- Python version 3.8 or above installed

- Understanding of Python syntax to be able to read code examples

- Pip Python package installer

- Visual Studio Code installed (ideally the latest version)

Setup

Shell with Git

On macOS and Linux, a bash shell will be available by default.

If you do not have a bash shell installed on your system and require assistance with the installation, you can take a look at the instructions provided by Software Carpentry for installing shell and Git.

Python

Python version 3.8 or above is required. Type python --version at your shell prompt and press enter to see what version of Python is installed on your system. If you do not have Python installed on your system and require assistance with the installation, you can take a look at the instructions provided by Software Carpentry for installing Python in preparation for undertaking their Python lesson.
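If you would rather check the version from within Python itself, the following is a minimal sketch. The helper name meets_requirement is hypothetical; the 3.8 minimum is taken from the prerequisites above:

```python
import sys

REQUIRED = (3, 8)  # minimum version stated in the prerequisites


def meets_requirement(version_info, required=REQUIRED):
    """Return True if the (major, minor) version is at least `required`."""
    return tuple(version_info[:2]) >= required


print("Running Python", sys.version.split()[0])
print("Meets the 3.8+ requirement:", meets_requirement(sys.version_info))
```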

Pip

The pip package installer should come together with your Python distribution. Try typing pip at the command line and you should see some usage instructions for the command appear if it is installed.

VS Code

The hands-on part of this topic will be conducted using Visual Studio Code (VS Code), a widely used IDE. Please download the appropriate version of Visual Studio Code for your operating system (Windows, macOS, or Linux) and system architecture (e.g., 64-bit, ARM).

Content from 2.2 Some Example Code

Last updated on 2025-10-24

Overview

Questions

- Why should I write readable code?

- What is a “code smell”?

Objectives

- Obtain and run example code used for this lesson

- List the benefits of writing readable code

- Describe the key indicators of a “bad code smell”

Obtaining Example Code

For this lesson we’ll be using some example code available on GitHub, which we’ll clone onto our machines using the Bash shell. So firstly open a Bash shell (via Git Bash in Windows or Terminal on a Mac). Then, on the command line, navigate to where you’d like the example code to reside, and use Git to clone it. For example, to clone the repository in our home directory, and change our directory to the repository contents:

Examining the Code

Next, let’s take a look at the code, which is in the root directory of the repository in a file called climate_analysis.py.

PYTHON

import string


shift = 3
comment = '#'
climate_data = open('data/sc_climate_data_10.csv', 'r')

def FahrToCelsius(fahr):
    celsius = ((fahr - 32) * (5/9))
    return celsius
def FahrToKelvin(fahr):
    kelvin = FahrToCelsius(fahr) + 273.15
    return kelvin



for line in climate_data:
    data = line.split(',')
    if data[0][0] != comment:
        fahr = float(data[3])
        celsius = FahrToCelsius(fahr)
        kelvin = FahrToKelvin(fahr)
        print('Max temperature in Celsius', celsius, 'Kelvin', kelvin)

The code is designed to process temperature data from a separate data file. It reads in data line by line from the data file, and prints out the Fahrenheit temperatures converted to both Celsius and Kelvin.

The code expects to find the data file sc_climate_data_10.csv (in Comma Separated Value, or CSV, format) in the data directory. The file looks like this:

# POINT_X,POINT_Y,Min_temp_Jul_F,Max_temp_jul_F,Rainfall_jul_inch
461196.8188,1198890.052,47.77,58.53,0.76
436196.8188,1191890.052,47.93,58.60,0.83
445196.8188,1168890.052,47.93,58.30,0.74
450196.8188,1144890.052,48.97,56.91,0.66
329196.8188,1034890.052,49.26,59.86,0.78
359196.8188,1017890.052,49.39,58.95,0.70
338196.8188,1011890.052,49.28,58.73,0.74
321196.8188,981890.0521,48.20,61.41,0.72
296196.8188,974890.0521,48.07,61.27,0.78
299196.8188,972890.0521,48.07,61.41,0.78

It contains a number of lines, each containing a number of values separated by commas. There’s also a comment line at the top, to tell us what each column represents.

Now let’s take a look at the Python code, using any text or code editor you like to open the file. You can also use nano if you’d prefer to use the command line, e.g.

BASH

nano climate_analysis.py

The code opens the data file, and also defines some functions to do two temperature conversions from Fahrenheit to Celsius and Fahrenheit to Kelvin. Note that for the purposes of this lesson, the code is deliberately written to contain some issues!

Why Write Readable Code?

Readable Code

As a group, answer the following questions:

- Who has seen or used code that looks like this?

- Who has written code like this?

No one writes great code that’s readable, well formatted, and well designed all the time. You often need to explore ideas with code to understand how the code should be designed, and this typically involves trying things out first. But the key is that once you understand how to do something, it’s a good idea to make sure it’s readable and understandable by other people, which may include a future version of yourself, six months into the future. So it’s really helpful to end up with good clean code so it’s easier to understand.

Another key benefit to writing “cleaner” code is that it’s generally easier to extend and otherwise modify in the future. When code is initially written it’s often impossible to tell if it will be reused in some way elsewhere. A familiar scenario is that you stop developing a piece of code for a while, and put it to one side. Maybe it’s not needed any more, or perhaps a project has finished. You forget about it, until suddenly, there’s a need to use the code again. Maybe all of it needs to be reused in another project, or maybe just a part of it. However, you come back to your code, and it’s a mess you can’t understand. But by spending a little time now to write good code while you understand it, you can save yourself (and possibly others) a lot of time later!

Does my Code Smell?

Developers sometimes talk about “code smells”. Code smells are cursory indications from looking at the source code that a piece of code may have some deeper issues. And looking at this code, it smells pretty terrible. For example, we can see that there is inconsistent spacing, with lines bunched together in some places, and very spread out in others. This doesn’t engender a great deal of confidence that the code will work as we expect, and it raises the question that if the style of the code appears rushed, what else has been rushed? How about the design of the code? Something to bear in mind when writing code!

Running the Example Code

Now despite the issues with the code, does it work? Let’s find out. Within the shell, in the root directory of the repository, run the code as follows:

BASH

python climate_analysis.py

OUTPUT

Max temperature in Celsius 14.73888888888889 Kelvin 287.88888888888886

Max temperature in Celsius 14.777777777777779 Kelvin 287.92777777777775

Max temperature in Celsius 14.61111111111111 Kelvin 287.76111111111106

Max temperature in Celsius 13.838888888888887 Kelvin 286.9888888888889

Max temperature in Celsius 15.477777777777778 Kelvin 288.62777777777774

Max temperature in Celsius 14.972222222222225 Kelvin 288.1222222222222

Max temperature in Celsius 14.85 Kelvin 288.0

Max temperature in Celsius 16.33888888888889 Kelvin 289.4888888888889

Max temperature in Celsius 16.261111111111113 Kelvin 289.4111111111111

Max temperature in Celsius 16.33888888888889 Kelvin 289.4888888888889

And we can see that the code does indeed appear to work, with Celsius and Kelvin values being printed to the terminal. But how can we improve its readability? We’ll use a special tool, called a code linter, to help us identify these sorts of issues with the code.

- No one writes readable, well designed and well formatted code all the time

- Writing clear and readable code helps others - as well as yourself in the future - to understand, modify and extend your code more easily

- A code smell is a cursory indication that a piece of code may have underlying issues

Content from 2.3 Analysing Code using a Linter

Last updated on 2025-10-23

Overview

Questions

- What tools can help with maintaining a consistent code style?

- How can I keep dependencies between different code projects separate?

- How can we automate code style checking?

Objectives

- Use Pylint to verify a program’s adherence to an established Python coding style convention

- Describe the benefits of a virtual environment

- Create and use a virtual environment to manage Python dependencies separately for our example code

- Install the Pylint static code analysis tool as a VSCode extension

- Use the Pylint extension to identify deeper potential issues and errors

- List the various types of issue messages that are output from Pylint

- Fix an issue identified by Pylint and re-run Pylint to ensure it is resolved

Installing a Code Linter

The first thing we need to do is install Pylint, a very well established tool for statically analysing Python code.

Now fortunately, Pylint can be installed as a Python package, and we’re going to create what’s known as a virtual environment to hold this installation of Pylint.

Installing Python Packages

Who has installed a Python package before, using the program pip? Who has created and used a Python virtual environment before?

Benefits of Virtual Environments

Virtual environments are an indispensable tool for managing package dependencies across multiple projects, and could be a whole topic in themselves. In the case of Python, the idea is that instead of installing Python packages at the level of our machine’s Python installation, which we could do, we’re going to install them within their own “container”, which is separate from the machine’s Python installation. Then we’ll run our Python code only using packages within that virtual environment.

There are a number of key benefits to using virtual environments:

- It creates a clear separation between the packages we use for this project, and the packages we use for other projects.

- We don’t end up with a machine’s Python installation containing a clutter of a thousand different packages, where determining which packages are used for which project often becomes very time consuming and prone to error.

- Since we are sure what our code actually needs as dependencies, it becomes much easier for someone else (which could be a future version of ourselves) to know what these dependencies are and install them to use our code.

- Virtual environments are not limited to Python; for example, there are similar tools available for Ruby, Java and JavaScript.

Setting up a Virtual Environment

Let’s now create a Python virtual environment and make use of it. Make sure you’re in the root directory of the repository, then type:

BASH

python -m venv venv

Here, we’re using the built-in Python venv module - short for virtual environment - to create a virtual environment directory called “venv”. We could have called the directory anything, but naming it venv (or .venv) is a common convention, as is creating it within the repository root directory. This makes sure the virtual environment is closely associated with this project, and not easily confused with another.
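Because venv is an ordinary standard library module, the same step can also be driven from Python code. The sketch below is only an illustration of what the module does, not a replacement for the command-line workflow used in this lesson. It builds the environment in a temporary directory (an assumption made so that nothing in your project is touched) and lists what was created:

```python
import tempfile
import venv
from pathlib import Path

# Use a throwaway directory so this sketch does not touch a real project.
with tempfile.TemporaryDirectory() as scratch_dir:
    env_dir = Path(scratch_dir) / "venv"
    # with_pip=False keeps the example quick; the command-line form
    # installs pip into the environment by default.
    venv.create(env_dir, with_pip=False)
    config_exists = (env_dir / "pyvenv.cfg").is_file()
    top_level = sorted(entry.name for entry in env_dir.iterdir())

print(config_exists)
print(top_level)
```

The pyvenv.cfg file is what marks the directory as a virtual environment; the remaining entries hold the environment’s own interpreter and installed packages.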

Once created, we can activate it so it’s the one in use:

BASH

[Linux] source venv/bin/activate
[Mac] source venv/bin/activate
[Windows] source venv/Scripts/activate

You should notice the prompt changes to reflect that the virtual environment is active, which is a handy reminder. For example:

OUTPUT

(venv) $

Now it’s created, let’s take a look at what’s in this virtual environment at this point.

BASH

pip list

OUTPUT

Package    Version
---------- -------
pip        22.0.2
setuptools 59.6.0

We can see this is essentially empty, aside from some default packages that are always installed. Note that whilst within this virtual environment, we no longer have access to any globally installed Python packages.
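Besides the changed prompt, Python itself can tell us whether a virtual environment is in use. The following is a small sketch, with the hypothetical helper name in_virtual_environment, based on the documented difference between sys.prefix and sys.base_prefix:

```python
import sys


def in_virtual_environment():
    """Return True when the running interpreter belongs to a virtual environment."""
    # Inside a venv, sys.prefix points at the environment directory while
    # sys.base_prefix still points at the underlying Python installation.
    return sys.prefix != sys.base_prefix


print("Interpreter prefix:", sys.prefix)
print("Base installation: ", sys.base_prefix)
print("Virtual environment active:", in_virtual_environment())
```

Running this with the environment activated, and again after deactivating it, should show the two prefixes diverging and then matching.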

Installing Pylint into our Virtual Environment

The next thing we can do is install any packages needed for this codebase. As it turns out, there aren’t any needed for the code itself, but we wish to use Pylint, and that’s a Python package.

What is Pylint?

Pylint is a tool that can be run from the command line or via IDEs like VSCode, which can help our code in many ways:

- Ensure consistent code style: in a similar way to the in-IDE context-sensitive highlighting provided by VSCode, it helps us stay consistent with established code style standards such as PEP 8 as we write code, by highlighting infractions.

- Perform basic error detection: Pylint can look for certain Python type errors.

- Check variable naming conventions: Pylint often goes beyond PEP 8 to include other common conventions, such as naming variables outside of functions in upper case.

- Customisation: you can specify which errors and conventions you wish to check for, and those you wish to ignore.

So we can install the pylint package into our virtual environment as:

BASH

pip install pylint

Now if we check the packages, we see:

OUTPUT

Package           Version
----------------- -------
astroid           3.3.9
dill              0.3.9
isort             6.0.1
mccabe            0.7.0
pip               22.0.2
platformdirs      4.3.7
pylint            3.3.6
setuptools        59.6.0
tomli             2.2.1
tomlkit           0.13.2
typing_extensions 4.13.1

So in addition to Pylint, we see a number of other dependent packages installed that are required by it.

We can also deactivate our virtual environment:

BASH

deactivate

You should see the “(venv)” prefix disappear, indicating we have returned to our global Python environment. Let’s reactivate it since we’ll need it to use pylint.

Analysing our Code using a Linter

Let’s point Pylint at our code and see what it reports:

BASH

pylint climate_analysis.py

We run this, and it gives us a report containing issues it has found with the code, and also an overall score.

OUTPUT

************* Module climate_analysis

climate_analysis.py:9:35: C0303: Trailing whitespace (trailing-whitespace)

climate_analysis.py:9:0: C0325: Unnecessary parens after '=' keyword (superfluous-parens)

climate_analysis.py:1:0: C0114: Missing module docstring (missing-module-docstring)

climate_analysis.py:4:0: C0103: Constant name "shift" doesn't conform to UPPER_CASE naming style (invalid-name)

climate_analysis.py:5:0: C0103: Constant name "comment" doesn't conform to UPPER_CASE naming style (invalid-name)

climate_analysis.py:6:15: W1514: Using open without explicitly specifying an encoding (unspecified-encoding)

climate_analysis.py:8:0: C0116: Missing function or method docstring (missing-function-docstring)

climate_analysis.py:8:0: C0103: Function name "FahrToCelsius" doesn't conform to snake_case naming style (invalid-name)

climate_analysis.py:8:18: W0621: Redefining name 'fahr' from outer scope (line 20) (redefined-outer-name)

climate_analysis.py:9:4: W0621: Redefining name 'celsius' from outer scope (line 21) (redefined-outer-name)

climate_analysis.py:11:0: C0116: Missing function or method docstring (missing-function-docstring)

climate_analysis.py:11:0: C0103: Function name "FahrToKelvin" doesn't conform to snake_case naming style (invalid-name)

climate_analysis.py:11:17: W0621: Redefining name 'fahr' from outer scope (line 20) (redefined-outer-name)

climate_analysis.py:12:4: W0621: Redefining name 'kelvin' from outer scope (line 22) (redefined-outer-name)

climate_analysis.py:6:15: R1732: Consider using 'with' for resource-allocating operations (consider-using-with)

climate_analysis.py:1:0: W0611: Unused import string (unused-import)

------------------------------------------------------------------

Your code has been rated at 0.59/10 (previous run: 0.59/10, +0.00)

For each issue, it tells us:

- The filename

- The line number and text column where the problem occurred

- An issue identifier (what type of issue it is)

- Some text describing this type of error (as well as a shortened form of the error type)
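The four parts listed above follow a regular layout, so a report line can be pulled apart mechanically. Here is a minimal sketch using a regular expression. The pattern and the helper name parse_message are assumptions based only on the report lines shown in this lesson, not on any Pylint API:

```python
import re

# Layout assumed from the report: filename:line:column: ID: text (symbolic-name)
MESSAGE_PATTERN = re.compile(
    r"^(?P<filename>[^:]+):(?P<line>\d+):(?P<column>\d+): "
    r"(?P<msg_id>[A-Z]\d{4}): (?P<text>.*) \((?P<symbol>[a-z0-9-]+)\)$"
)


def parse_message(report_line):
    """Split one report line into its parts, or return None if it does not match."""
    match = MESSAGE_PATTERN.match(report_line)
    if match is None:
        return None
    parts = match.groupdict()
    parts["line"] = int(parts["line"])
    parts["column"] = int(parts["column"])
    return parts


example = "climate_analysis.py:9:35: C0303: Trailing whitespace (trailing-whitespace)"
parsed = parse_message(example)
print(parsed["filename"], parsed["line"], parsed["column"])
print(parsed["msg_id"], parsed["symbol"])
```

A script like this is handy for one-off summaries of a report; for anything more serious, Pylint’s own output options are a better starting point.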

You’ll notice there’s also a score at the bottom, out of 10. Essentially, every infraction deducts from an ideal score of 10, in proportion to the number of statements in the code. Older versions of Pylint could report a negative score, since deductions simply kept accumulating, whereas recent versions stop at 0. Our score here is very low, at 0.59/10, and if we were to resolve each of these issues in turn, we should get a perfect score.
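To see where a figure like 0.59 can come from, here is a sketch of the scoring arithmetic, modelled on Pylint’s documented default evaluation formula. The message counts are tallied from the report above. The statement count of 17 is our own count of the statements in the script, and the floor at 0 reflects recent Pylint releases, so treat both as assumptions:

```python
def pylint_style_score(error, warning, refactor, convention, statement):
    """Approximate the default score; errors weigh five times as much."""
    deductions = 5 * error + warning + refactor + convention
    return max(0.0, 10.0 - (deductions / statement) * 10)


# Tallied from the report above: 9 convention, 6 warning, 1 refactor, 0 error.
# statement=17 is our own count of statements in the script (an assumption).
before = pylint_style_score(error=0, warning=6, refactor=1, convention=9, statement=17)
# The same tally with one convention message fewer.
after = pylint_style_score(error=0, warning=6, refactor=1, convention=8, statement=17)
print(f"{before:.2f} {after:.2f}")  # prints: 0.59 1.18
```

Because the deductions are divided by the number of statements, a single message costs far more in a short script like ours than it would in a large codebase.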

Identifying and Fixing an Issue

We can also ask for more information on an issue identifier. For example, we can see that at line 9, near column 35, there is trailing whitespace. We can ask Pylint to explain this message:

BASH

pylint --help-msg C0303

OUTPUT

:trailing-whitespace (C0303): *Trailing whitespace*
  Used when there is whitespace between the end of a line and the newline. This
  message belongs to the format checker.

This is helpful if we need clarification on a particular message.

If we now edit the file, and go to line 9, column 35, we can see that there is an unnecessary space.

Let’s fix this issue now by removing the space, save the changed file, and then re-run pylint on it.

OUTPUT

------------------------------------------------------------------

Your code has been rated at 1.18/10 (previous run: 0.59/10, +0.59)

And we see that the C0303 issue has disappeared and our score has gone up! Note that it also gives us a comparison against our last score.

As a gentle warning: it can get quite addictive to keep increasing your score, which might well be the point!

So looking at the issue identifiers, e.g. C0303, what do the C, W, R prefix symbols mean?

At the end, we can see a breakdown of what they mean:

- I is for informational messages

- C is for a programming standards violation. Part of the code is not conforming to the normally accepted conventions of writing good code (e.g. things like variable or function naming)

- R is for a need to refactor, due to a “bad code smell”

- W is for a warning - something that isn’t critical, but should be resolved

- E is for an error - so Pylint thinks it’s spotted a bug (useful, but don’t depend on this to find errors!)

- F is for a fatal Pylint error

So if we run it again on our code:

OUTPUT

************* Module climate_analysis

climate_analysis.py:9:0: C0325: Unnecessary parens after '=' keyword (superfluous-parens)

climate_analysis.py:1:0: C0114: Missing module docstring (missing-module-docstring)

climate_analysis.py:4:0: C0103: Constant name "shift" doesn't conform to UPPER_CASE naming style (invalid-name)

climate_analysis.py:5:0: C0103: Constant name "comment" doesn't conform to UPPER_CASE naming style (invalid-name)

climate_analysis.py:6:15: W1514: Using open without explicitly specifying an encoding (unspecified-encoding)

climate_analysis.py:8:0: C0116: Missing function or method docstring (missing-function-docstring)

climate_analysis.py:8:0: C0103: Function name "FahrToCelsius" doesn't conform to snake_case naming style (invalid-name)

climate_analysis.py:8:18: W0621: Redefining name 'fahr' from outer scope (line 20) (redefined-outer-name)

climate_analysis.py:9:4: W0621: Redefining name 'celsius' from outer scope (line 21) (redefined-outer-name)

climate_analysis.py:11:0: C0116: Missing function or method docstring (missing-function-docstring)

climate_analysis.py:11:0: C0103: Function name "FahrToKelvin" doesn't conform to snake_case naming style (invalid-name)

climate_analysis.py:11:17: W0621: Redefining name 'fahr' from outer scope (line 20) (redefined-outer-name)

climate_analysis.py:12:4: W0621: Redefining name 'kelvin' from outer scope (line 22) (redefined-outer-name)

climate_analysis.py:6:15: R1732: Consider using 'with' for resource-allocating operations (consider-using-with)

climate_analysis.py:1:0: W0611: Unused import string (unused-import)

------------------------------------------------------------------

Your code has been rated at 1.18/10 (previous run: 1.18/10, +0.00)

We can see that most of our issues are to do with coding conventions.
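That observation can be checked with a quick tally. The sketch below groups the issue identifiers from the report above by their prefix letter. The identifier list is copied by hand from that report, and the category labels are our own shorthand for the meanings listed earlier:

```python
from collections import Counter

CATEGORY_NAMES = {
    "I": "informational",
    "C": "convention",
    "R": "refactor",
    "W": "warning",
    "E": "error",
    "F": "fatal",
}

# Issue identifiers copied from the report above, in order of appearance.
message_ids = [
    "C0325", "C0114", "C0103", "C0103", "W1514",
    "C0116", "C0103", "W0621", "W0621", "C0116",
    "C0103", "W0621", "W0621", "R1732", "W0611",
]

# The first character of each identifier is its category prefix.
tally = Counter(CATEGORY_NAMES[msg_id[0]] for msg_id in message_ids)
for category, count in tally.most_common():
    print(f"{category}: {count}")
```

With 8 convention messages against 6 warnings and 1 refactor suggestion, conventions are indeed the largest group, though the warnings are not far behind.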

- Virtual environments help us maintain dependencies between different code projects separately, avoiding confusion between which dependencies are strictly required for a given project

- One method to create a Python virtual environment is to use python -m venv venv to generate a virtual environment in the current directory called venv

- Code linters such as Pylint help to analyse and identify deeper issues with our code, including potential run-time errors

- Pylint outputs issues of different types, including informational messages, programming standards violations, warnings, and errors

- Pylint outputs an overall score for our code based on deductions from a perfect score of 10

Content from 2.4 Advanced Linting Features

Last updated on 2025-10-23

Overview

Questions

- What can I do to increase the detail of Pylint reports?

- How can I reduce unwanted messages from Pylint?

- How can I use static code analysis tools within VSCode?

Objectives

- Use Pylint to produce a verbose report showing number of occurrences of encountered message types

- Fix an issue within our code to increase our Pylint score

- Specify message types to Pylint that we don’t want reported

- Install a Pylint extension into VSCode

More Verbose Reporting

We can also obtain a more verbose report by adding --reports y to the command, which gives us a lot more detail:

BASH

pylint --reports y climate_analysis.py

Here’s a part of that output:

OUTPUT

...

Messages

--------

+---------------------------+------------+

|message id |occurrences |

+===========================+============+

|redefined-outer-name |4 |

+---------------------------+------------+

|invalid-name |4 |

+---------------------------+------------+

|missing-function-docstring |2 |

+---------------------------+------------+

|unused-import |1 |

+---------------------------+------------+

|unspecified-encoding |1 |

+---------------------------+------------+

|superfluous-parens |1 |

+---------------------------+------------+

|missing-module-docstring |1 |

+---------------------------+------------+

|consider-using-with |1 |

+---------------------------+------------+

...

It gives you some overall statistics, plus comparisons with the last time you ran it, on aspects such as:

- How many modules, classes, methods and functions were looked at

- Raw metrics (which we’ll look at in a minute)

- Extent of code duplication (none, which is good)

- Number of messages by category (again, we can see that it’s mainly convention issues)

- A sorted count of the messages we received

Looking at raw metrics, we can see that it breaks down our program into how many lines are code lines, Python docstrings, standalone comments, and empty lines. This is very useful, since it gives us an idea of how well commented our code is. In this case - not very well commented at all! For normal comments, the usually accepted wisdom is to add them to explain why you are doing something, or perhaps to explain how necessarily complex code works, but not to explain the obvious, since clearly written code should do that itself.

Increasing our Pylint Score - Adding a Docstring

Docstrings

Who’s familiar with Python docstrings?

Docstrings are a special kind of comment for a function that explain what the function does, the parameters it expects, and what is returned. You can also write docstrings for classes, methods, and modules, and you should usually aim to add docstring comments to your code wherever you can, particularly for critical or complex functions.

Let’s add one to our code now, within the fahr_to_celsius function.

PYTHON

"""Convert Fahrenheit to Celsius.

:param fahr: temperature in Fahrenheit
:returns: temperature in Celsius
"""

Re-run the pylint command: we can see we have one fewer docstring error, and a slightly higher score.
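As a sketch of where the docstring sits, it goes on the first line inside the function, directly beneath the def statement. The function body shown here is an assumption (the standard conversion formula) rather than a copy of the code in climate_analysis.py.

```python
def fahr_to_celsius(fahr):
    """Convert Fahrenheit to Celsius.

    :param fahr: temperature in Fahrenheit
    :returns: temperature in Celsius
    """
    # Assumed body: the standard conversion formula, not copied from the lesson file
    return (fahr - 32) * 5 / 9


# The docstring is stored on the function itself, which is how tools can find it
print(fahr_to_celsius.__doc__.splitlines()[0])  # Convert Fahrenheit to Celsius.
print(fahr_to_celsius(212))                     # 100.0
```

Because the docstring is attached to the function object, Python's built-in help() and IDE hover tooltips can display it as well.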

If you’d like to know more about docstrings and commenting, there’s an in-depth RealPython tutorial on these and the different ways you can format them.

Ignoring Issues

We can instruct pylint to ignore particular types of issues using the --disable= argument, which is useful if they are seen as unimportant or pedantic, or if we need to see other types more clearly. For example, --disable=unused-import ignores any unused imports, and --disable=W disables all issues of type “warning”.

This can be especially useful if we wish to ignore particularly pedantic rules, such as those flagging lines longer than 100 characters.
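The sketch below shows one way these command lines could be assembled. The pylint_command helper is hypothetical and written only for this example; it builds the command text without running anything, so it works even where Pylint is not installed. It assumes the file being checked is climate_analysis.py.

```python
import shlex


def pylint_command(target, disable=()):
    """Build (but do not run) a pylint command that disables the given messages."""
    args = ["pylint"]
    if disable:
        # Several identifiers can be given as one comma-separated list
        args.append("--disable=" + ",".join(disable))
    args.append(target)
    return shlex.join(args)


# Ignore unused imports only
print(pylint_command("climate_analysis.py", disable=["unused-import"]))
# pylint --disable=unused-import climate_analysis.py

# Ignore every message in the "warning" category
print(pylint_command("climate_analysis.py", disable=["W"]))
# pylint --disable=W climate_analysis.py
```

The printed lines are what you would type into the shell from the directory containing the file.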

Challenge

Edit the climate_analysis.py file and add in a comment line that exceeds 100 characters. Then re-run pylint and determine the issue identifier for this message, and re-run pylint again disabling this specific issue.

OUTPUT

************* Module climate_analysis
climate_analysis.py:3:0: C0301: Line too long (111/100) (line-too-long)
climate_analysis.py:17:0: C0325: Unnecessary parens after '=' keyword (superfluous-parens)
climate_analysis.py:1:0: C0114: Missing module docstring (missing-module-docstring)
...

We can see that the identifier is C0301, so we re-run pylint with the --disable=C0301 argument.

However, if we wanted to ignore this issue for the foreseeable future, typing this in every time would be tiresome. Fortunately we can specify a configuration file to pylint which specifies how we want to interpret issues.

We do this by first using pylint to generate a default .pylintrc file. It directs this as output to the shell, so we need to redirect it to a file to capture it. Ensure you are in the repository root directory, then run pylint --generate-rcfile > .pylintrc.

If you edit this generated file you’ll notice there are many things we can specify to pylint. For now, look for disable= and add C0301 to the comma-separated list of ignored issues already present, e.g.:

# no Warning level messages displayed, use "--disable=all --enable=classes
# --disable=W".
disable=C0301,
        raw-checker-failed,
        bad-inline-option,
        locally-disabled,
        file-ignored,
        suppressed-message,
        useless-suppression,
        deprecated-pragma,
        use-implicit-booleaness-not-comparison-to-string,
        use-implicit-booleaness-not-comparison-to-zero,
        use-symbolic-message-instead

Every time you re-run pylint now, the C0301 issue will no longer be reported.
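If you want to double-check that your edit was picked up, the configuration file is in an INI-style format that Python's standard configparser module can read. This is a sketch under assumptions: it parses a shortened, embedded copy of the file rather than your real .pylintrc, and it assumes the disable= option sits in a section named [MESSAGES CONTROL], which may differ between Pylint versions. The disabled_messages helper is a hypothetical name.

```python
import configparser

# Shortened, illustrative copy of the relevant part of a .pylintrc file
RC_TEXT = """\
[MESSAGES CONTROL]
disable=C0301,
        raw-checker-failed,
        bad-inline-option
"""


def disabled_messages(rc_text, section="MESSAGES CONTROL"):
    """Return the list of message identifiers in the disable= option."""
    # interpolation=None stops '%' characters elsewhere in the file being
    # treated as configparser placeholders
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(rc_text)
    raw = parser.get(section, "disable")
    return [item.strip() for item in raw.split(",") if item.strip()]


print(disabled_messages(RC_TEXT))
# ['C0301', 'raw-checker-failed', 'bad-inline-option']
print("C0301" in disabled_messages(RC_TEXT))
# True
```

To check a real file you could pass in its contents read from disk instead of RC_TEXT.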

Using Pylint within VSCode

The good news is that if you’re using the VSCode IDE, we can also (or alternatively) install a Python linter in VSCode to give us this code analysis functionality, by installing the Pylint extension. Select the Extensions icon and this time search for Pylint, the one by Microsoft, and click Install.

Going back to our code you should now find lots of squiggly underlines of various colours.

I don’t see any Squiggly Underlines!!

If you don’t see any squiggly underlines in the editor, it could be that the linter extension hasn’t looked at your code yet. To trigger the linter, try saving the file: go to File then Save on the menu bar, and you should now see a lot of squiggly underlines in the code.

These squiggly lines indicate an issue, and by hovering over them, we can see details of the issue. For example, by hovering over the variables shift or comment, we can see that the variable names don’t conform to what’s known as an UPPER_CASE naming convention. Put simply, the linter has identified these variables as constants, and typically these are written in upper case. We should rename them, e.g. SHIFT and COMMENT. Having done this, we also need to update the reference to comment in the code so it’s also upper case.

Now if we save the file by selecting File then Save, we should see the linter rerun, and those highlighted issues disappear.
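As a minimal sketch of this rename, the snippet below shows module-level names before and after the change. The values assigned and the is_comment function are hypothetical, chosen only to show that every reference to a renamed constant has to be updated too; they are not taken from climate_analysis.py.

```python
# Before: lower-case names assigned at module level are treated as constants
# by the linter, which reports invalid-name for them
# shift = 3
# comment = "#"

# After: renamed to follow the UPPER_CASE convention (hypothetical values)
SHIFT = 3
COMMENT = "#"


def is_comment(line):
    """Return True if the line starts with the comment marker."""
    # This reference was updated from 'comment' to 'COMMENT' as well
    return line.startswith(COMMENT)


print(is_comment("# a header line"))  # True
print(is_comment("1.5,2.5,3.5"))      # False
```

Forgetting to update a reference would not be a style issue but a genuine error (a NameError at run time), which the linter would also highlight.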

We can also see a comprehensive list of all the issues found, by opening a code Problems window. In the menu, go to View then Problems, and then you’ll see a complete list of issues which we can work on displayed in the pane at the bottom of the code editor. We don’t have to address them, of course, but by following them we bring our code style closer to a commonly accepted and consistent form of Python.

Summary

Code linters like pylint help us to identify problems in our code, such as code styling issues and potential errors, and importantly, if we work in a team of developers such tools help us keep our code style consistent. Attempting to understand a code base which employs a variety of coding styles (perhaps even in the same source file) can be remarkably difficult.

But there are some aspects we should be careful of when using linters and interpreting their results:

- They don’t tell us that the code actually works and they don’t tell us if the results our code produces are actually correct, so we still need to test our code.

- They don’t give us any idea of whether it’s a good implementation, or whether the technical choices are good ones. For example, this code contains functions to conduct temperature conversions, but it turns out there are a number of well-maintained Python packages that do this (e.g. pytemperature), so we should be using a tried and tested package instead of reinventing the wheel.

- They also don’t tell us if the implementation is actually fit for purpose. Even if the code is a good implementation, and it works as expected, is it actually solving the intended problem?

- They also don’t tell us anything about the data the program uses which may have its own problems.

- A high score or zero warnings may give us false confidence. Just because we have reached a 10.00 score, doesn’t mean the code is actually good code, just that it’s likely well formatted and hopefully easier to read and understand.
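To illustrate the first point in the list above, here is a sketch of two functions that are equally well named and documented, so a style checker has no reason to prefer one over the other, yet only one gives the right answer. Both functions are written for this example; the buggy one is deliberately wrong.

```python
def fahr_to_celsius_buggy(fahr):
    """Convert Fahrenheit to Celsius (deliberately wrong: adds 32 instead of subtracting)."""
    return (fahr + 32) * 5 / 9


def fahr_to_celsius(fahr):
    """Convert Fahrenheit to Celsius."""
    return (fahr - 32) * 5 / 9


# Water freezes at 32 degrees Fahrenheit, which should be 0 degrees Celsius
print(fahr_to_celsius_buggy(32) == 0)  # False
print(fahr_to_celsius(32) == 0)        # True
```

Only a check against a known value, in other words a test, tells the two apart.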

So we have to be a bit careful. These are all valid, high-level questions to ask while you’re writing code, both as a team, and also individually. In the fog of development, it can be surprisingly easy to lose track of what’s actually being implemented and how it’s being implemented. A good idea is to revisit these questions regularly, to be sure you can answer them!

However, whilst taking these shortcomings into account, linters are a very low effort way to help us improve our code and keep it consistent.

- Use the --reports y argument on the command line to Pylint to provide verbose reports

- Instruct Pylint to ignore messages on the command line using the --disable= argument followed by a comma-separated list of message identifiers

- Use pylint --generate-rcfile > .pylintrc to generate a pre-populated configuration file for Pylint, which you can edit to customise Pylint’s behaviour when run within a particular directory

- Pylint can be run on the command line or used within VSCode

- Using Pylint helps us keep our code consistent, particularly across teams

- Don’t use Pylint feedback and scores as the only means to judge code quality

Content from Lesson 3: Intermediate Git

Last updated on 2025-05-29

Overview

Questions

- What is a Git branch and why is it useful in collaborative development?

- When should I create a new branch in my project?

- What are the differences between fast-forward merge, 3-way merge, rebase, and squash and merge?

- How does Git handle merging when branches have diverged?

Objectives

- Understand the purpose and benefits of using Git branches, especially the feature branch workflow in collaborative projects.

- Compare Git merging strategies (fast-forward, 3-way merge, rebase, squash and merge) and understand when to use each.

- Gain familiarity with intermediate Git features, including cherry-picking, stashing, and resetting.

Basic Git training usually covers the essential concepts, such as adding files, committing changes, viewing commit history, and checking out or reverting to earlier versions. But for RSEs working in collaborative, code-intensive projects, that is just the tip of the iceberg. More detailed topics like branching and merging strategies, and understanding merge conflicts are critical for managing code across teams and maintaining clean, reproducible development workflows.

In this session we will explore branching and feature branch workflow, a popular method for collaborative development using Git, along with some intermediate Git features (merging, rebasing, cherry-picking) and handling merge conflicts that can help streamline your development workflow and avoid common pitfalls in collaborative software development.

Introduction to Feature Branch Workflow

Git Branches

You might be used to committing code directly without being sure what branches really are or why they matter. When you start a new Git repository and begin committing, all changes go into a branch. By default, this is usually called main (or master in older repositories). The name main is just a convention: a Git repository’s default branch can technically be named anything.

Why not just always use the main branch? While it is possible to always commit to main, it is not ideal when you are collaborating with others, working on new features or experimenting with your code, and want to keep main clean and stable for your users and collaborators.

Feature Branch

Creating a separate branch (often called a “feature” branch) allows you to: add or test code (containing a new “feature”) without affecting the main line of development; work in parallel with colleagues without worrying that your code may break something for the rest of the team; and review and merge changes safely after testing, using pull/merge requests.

How do you decide when to use a new branch? You should consider starting a new branch whenever you are working on a distinct feature or fixing a specific bug. This allows you to collect a related set of commits in one place, without interfering with other parts of the project.

Branching helps separate concerns in your codebase, making development, testing, and code review much easier. It also reduces the chance of conflicts during collaborative work, especially when multiple people are contributing to the same repository.

This approach is known as the feature branch workflow. In this model, each new feature or fix lives in its own branch. Once the work is complete and has been tested, the branch is reviewed by project collaborators (other than the code author), any merge conflicts addressed and the new work merged back into the main branch. Using feature branches is an efficient way to manage changes, collaborate effectively, and keep the main branch stable and production-ready.

Introduction to Merging Strategies